GitHub Repository

Overview

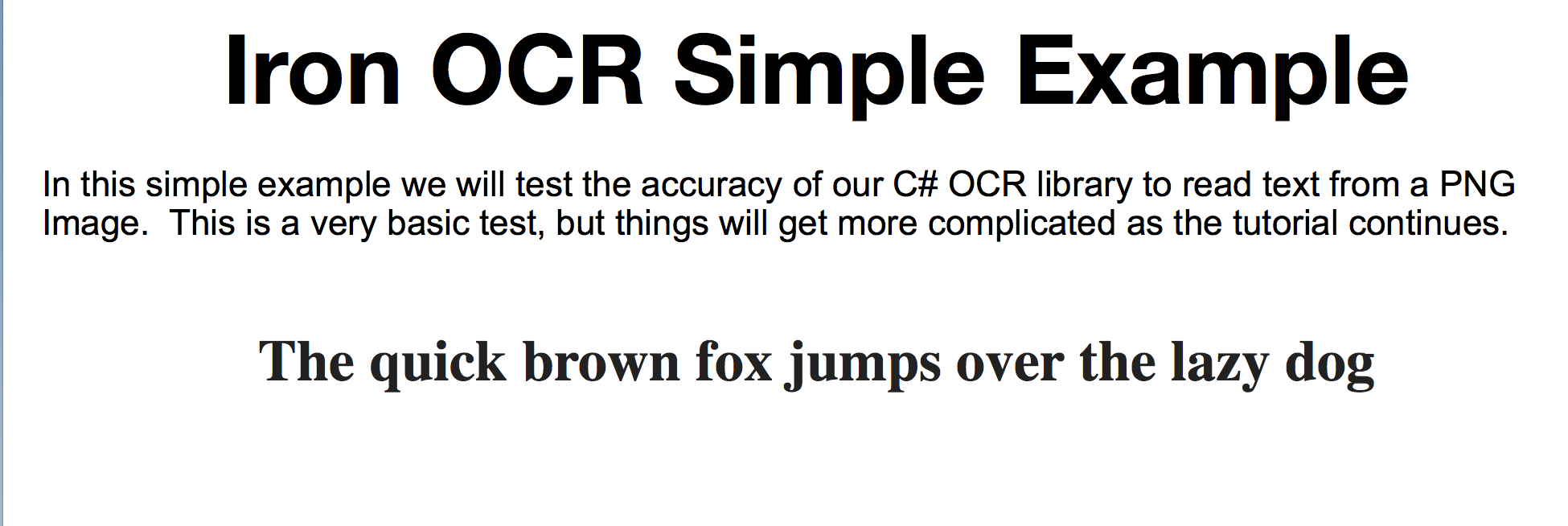

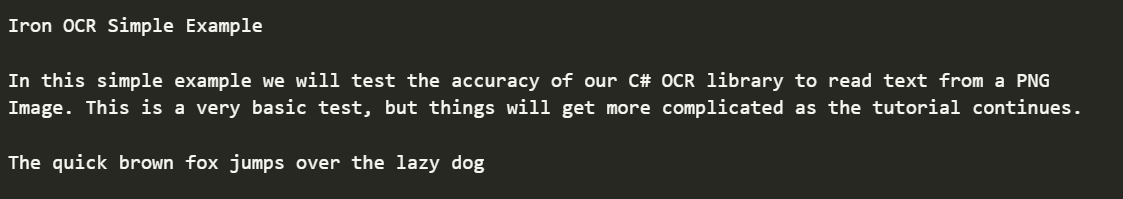

This project focuses on the design and implementation of an optical character recognition (OCR) system for the NAO humanoid robot. Using the Tesseract engine, the robot can recognize both printed and handwritten text in images captured by its camera. The recognized text is then passed to the robot’s text-to-speech engine, so the robot can say the recognized information aloud.

Implementation Details

- OCR System Overview

  - The OCR system uses the Tesseract OCR engine, an open-source tool originally developed at Hewlett-Packard and later sponsored by Google, to recognize text in images. Tesseract is integrated into the NAO robot, which captures the images with its onboard camera.

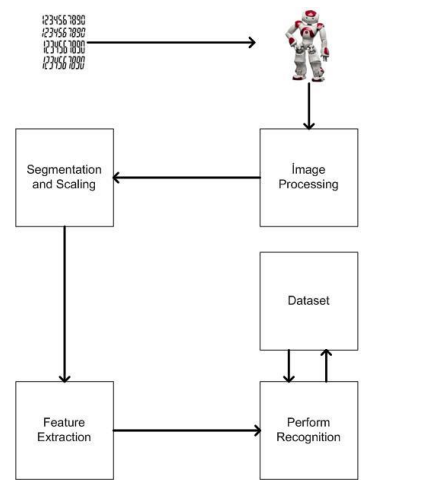

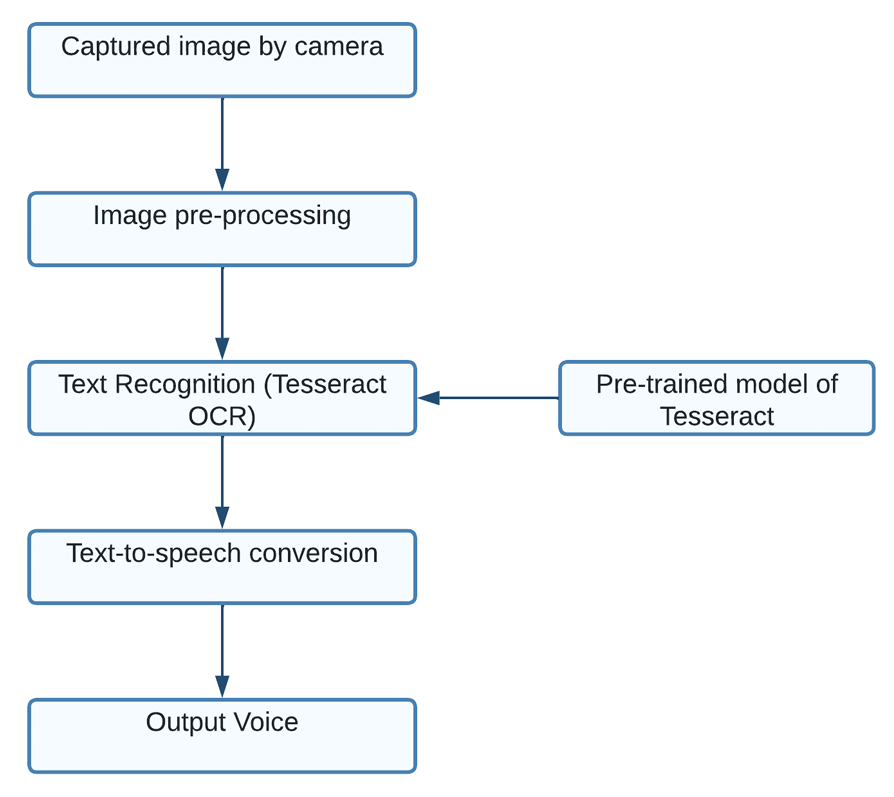
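
As a minimal sketch of the recognition call, the snippet below runs the Tesseract command-line tool from Python with `subprocess`. It assumes Python 3.7+, Tesseract 4 or later on `PATH`, and English language data (`eng`). Page-segmentation mode 6 (treat the image as one uniform block of text) is an illustrative choice rather than this project's documented setting, and both helper names are hypothetical.

```python
import shutil
import subprocess


def build_tesseract_command(image_path, lang="eng", psm=6):
    """Build a Tesseract CLI invocation that prints the recognized text.

    Passing "stdout" as the output base makes Tesseract write the text to
    standard output instead of a .txt file. `psm` is the page segmentation
    mode (6 = assume a single uniform block of text).
    """
    return ["tesseract", image_path, "stdout", "-l", lang, "--psm", str(psm)]


def recognize_text(image_path, lang="eng", psm=6):
    """Run Tesseract on an image file and return the recognized text."""
    if shutil.which("tesseract") is None:
        raise RuntimeError("tesseract executable not found on PATH")
    result = subprocess.run(
        build_tesseract_command(image_path, lang, psm),
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # decode the output as str
        check=True,           # raise CalledProcessError on a non-zero exit
    )
    return result.stdout.strip()
```

For example, `recognize_text("capture.png")` returns whatever text Tesseract finds in that file. If the `pytesseract` wrapper is used instead, `pytesseract.image_to_string(image, lang="eng", config="--psm 6")` performs the equivalent call on an in-memory image.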

- System Architecture

  - Image Capture: The NAO robot’s camera captures images containing handwritten or printed text.

  - Image Preprocessing: Optionally, preprocessing techniques like resizing, noise removal, and binarization are applied to improve OCR accuracy.

  - Text Recognition: The Tesseract engine processes the image and extracts the text.

  - Text-to-Speech: The extracted text is converted to speech using the robot’s text-to-speech functionality, allowing the robot to “read” the text aloud.
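
The four stages above can be sketched as one function that receives each stage as a callable, so the control flow can be exercised without a robot. This is a hypothetical structure, not the project's code: on NAO, `capture` would wrap NAOqi's `ALVideoDevice`, `preprocess` the OpenCV steps, `recognize` the Tesseract call, and `speak` the `say` method of `ALTextToSpeech`. Collapsing whitespace and skipping empty results are assumptions added here so the robot does not read out stray line breaks.

```python
def read_aloud(capture, preprocess, recognize, speak):
    """Run one capture -> preprocess -> recognize -> speak cycle.

    Each stage is a callable supplied by the caller (hypothetical interface).
    Returns the text that was spoken, or None if nothing was recognized.
    """
    image = capture()                # e.g. a frame from the NAO camera
    prepared = preprocess(image)     # e.g. grayscale conversion + thresholding
    raw_text = recognize(prepared)   # e.g. a Tesseract call
    # Collapse the line breaks and repeated spaces OCR output often contains
    text = " ".join(raw_text.split())
    if not text:
        return None                  # nothing recognized: stay silent
    speak(text)                      # e.g. ALTextToSpeech.say
    return text
```

With the NAOqi Python SDK, `speak` could be `ALProxy("ALTextToSpeech", robot_ip, 9559).say`, where `robot_ip` is a placeholder for the robot's address and 9559 is NAOqi's default port.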

Methodology

- Tesseract OCR is used together with the OpenCV library, which handles image preprocessing (grayscale conversion and thresholding) before recognition.

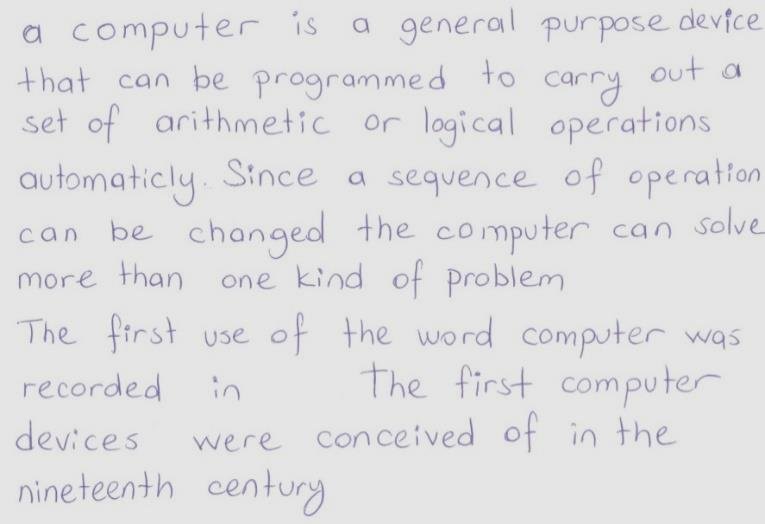

- The system is capable of recognizing both printed and handwritten text with a high degree of accuracy.
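
The README does not name the thresholding rule. One common choice for text images is Otsu's method, which OpenCV provides via `cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)`. To show what that call computes, here is a dependency-free Python 3 sketch that treats a grayscale image as rows of integers from 0 to 255; the function names are hypothetical, and in the real pipeline the OpenCV call would be used.

```python
def otsu_threshold(gray_rows):
    """Return the Otsu threshold (0-255) for a grayscale image given as rows of ints."""
    histogram = [0] * 256
    for row in gray_rows:
        for value in row:
            histogram[value] += 1
    total = sum(histogram)
    sum_all = sum(level * count for level, count in enumerate(histogram))

    best_threshold, best_variance = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for t in range(256):
        weight_bg += histogram[t]          # pixels with value <= t
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg      # pixels with value > t
        if weight_fg == 0:
            break
        sum_bg += t * histogram[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance (unnormalized); Otsu keeps the t that maximizes it
        variance = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if variance > best_variance:
            best_threshold, best_variance = t, variance
    return best_threshold


def binarize(gray_rows, threshold):
    """Map pixels above `threshold` to 255 (white) and the rest to 0 (black)."""
    return [[255 if value > threshold else 0 for value in row] for row in gray_rows]
```

Because Otsu's method tests every candidate threshold and keeps the one that best separates dark and light pixels, it adapts to the lighting of each frame instead of relying on a fixed cutoff.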

Integration with NAO Robot

- The OCR system is fully integrated into the NAO robot, utilizing its sensors, camera, and computational power.

- The robot can recognize and communicate text in real time, making it a versatile tool for educational, healthcare, and customer service applications.
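
To hand a camera frame to the preprocessing step, the raw result of NAOqi's `ALVideoDevice.getImageRemote()` has to be unpacked: index 0 holds the width, index 1 the height, and index 6 the pixel bytes. The sketch below assumes the camera was subscribed in RGB color space (3 bytes per pixel) and converts the frame to grayscale rows using the BT.601 luma weights that OpenCV's RGB-to-gray conversion also uses. The function name is hypothetical, and the container layout should be checked against the NAOqi documentation for the version in use.

```python
def nao_rgb_frame_to_gray(nao_image):
    """Convert an ALVideoDevice.getImageRemote() result to grayscale rows.

    Assumes an RGB frame: index 0 = width, index 1 = height,
    index 6 = raw pixel buffer with 3 bytes per pixel.
    """
    width, height = nao_image[0], nao_image[1]
    data = bytearray(nao_image[6])
    if len(data) != width * height * 3:
        raise ValueError("expected a 3-channel RGB buffer")
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            i = (y * width + x) * 3
            r, g, b = data[i], data[i + 1], data[i + 2]
            # BT.601 luma weights, the same ones OpenCV uses for RGB -> gray
            row.append(int(round(0.299 * r + 0.587 * g + 0.114 * b)))
        rows.append(row)
    return rows
```

If NumPy and OpenCV are available, the equivalent is `cv2.cvtColor(numpy.frombuffer(frame[6], numpy.uint8).reshape(height, width, 3), cv2.COLOR_RGB2GRAY)`, which is much faster than the pure-Python loop.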

Technologies Used

- NAO Robot: Humanoid robot platform from SoftBank Robotics (originally developed by Aldebaran Robotics).

- Tesseract OCR: Open-source OCR engine used for text recognition.

- OpenCV: Used for image preprocessing to enhance OCR accuracy.

- Python: The programming language used for integrating OCR functionality with NAO’s API.

- Text-to-Speech (TTS): Converts the recognized text into audible speech using the robot’s built-in TTS engine.

Results & Findings

- The OCR system successfully recognized both printed and handwritten text with high accuracy.

- Preprocessing steps such as thresholding and grayscale conversion significantly improved text extraction.

- The NAO robot demonstrated the ability to “read” text from images and communicate it audibly, showcasing potential applications in educational and interactive environments.

Future Improvements

- Optimize the system’s efficiency and speed by exploring parallel processing techniques.

- Enhance recognition capabilities for more complex handwritten text using advanced deep learning models.

- Explore the use of machine learning algorithms to improve OCR accuracy in diverse environments.

Contributors

- Imad-Eddine NACIRI

- Achraf Berriane

- Errouji Oussama